- 8 Minutes to read

- Print

- DarkLight

- PDF

Setting up Google BigQuery as a Target

- 8 Minutes to read

- Print

- DarkLight

- PDF

Overview

Before you use this guide, please make sure you’ve signed up for the Google Cloud Platform and that you have a console admin user.

If you don’t have one of these prerequisites, click here.

With Rivery you can either choose to leverage the automatically created service account and bucket for your account or set your own.

This guide will walk through creating the following in order to create a connection to BigQuery within Rivery:

For Rivery default service account and bucket:

- Enable BigQuery API

- Grant permissions to the service account on your project.

- Create a BigQuery dataset

For custom service account and bucket:

- Google Cloud Service Account

- Enable Cloud Storage and BigQuery APIs

- Google Cloud Storage Bucket

- Create a BigQuery dataset

At the end of this guide, you will be able to quickly connect your BigQuery account to Rivery from inside the tool.

Working with Rivery default file-zone (default service account and bucket)

Rivery automatically creates a dedicated service account and GCS bucket for your ease of use.

The service account only has access to the dedicated bucket created.

Enable BigQuery API

- Go to API's & Services on the Google Cloud Console menu pane -> Click on Library.

- Search for Google Cloud Storage JSON API and click on Enable.

- Search for BigQuery API and click on Enable .

Grant permission to the service account

Rivery uploads your source data into a dedicated Google Cloud Storage bucket. Your BigQuery project then pulls in data from this storage bucket. To access the BigQuery project, you will need to grant permission to the service account to the relevant BigQuery project.

Your service account is located in the BigQuery create connection screen:

- Sign in to Google Cloud Platform Console. Make sure you are on the desired project.

- Go to IAM & Admin -> IAM.

- Click on +add.

- In the new window add the service account created by Rivery.

You can find the service account in the BigQuery connection screen - Choose the BigQuery Admin role and click save.

Configure Your BigQuery in Rivery

For our last step we will configure a BigQuery connection within Rivery:

- Log into Rivery.

- Create a new connection for your BigQuery instance:

- Go to Connections.

- Select New Connection.

- From the source list, choose Google BigQuery

- Next, enter the credentials information for your Google Platform Service Account:

- Connection Name

- Description (optional)

- Project Id (can be found on the Google Cloud Platform Home section)

- Project Number (optional - can be found on the Google Cloud Platform Home section)

- Region - Where your default bucket and dataset(if created VIA a river) will be created (default is USA)

Click 'Test Connection' at the bottom to test! Once a valid connection is made, save the connection.

If you cannot get a valid connection set up, contact helpme@rivery.io for support.

That's it, you now have a working connection to BigQuery you can use in RIvery.

If you'd like to create a dataset (optional) please see the create a BigQuery Dataset section below

Working with Rivery custom file-zone (custom service account and bucket)

Create a Service Account User for Rivery

Rivery uploads your source data into a Google Cloud Storage bucket. Your BigQuery project then pulls in data from this storage bucket. To access the relevant storage bucket and BigQuery project, you will need to first create a user in the Google Cloud Platform Console that has access to the relevant bucket and the relevant BigQuery project.

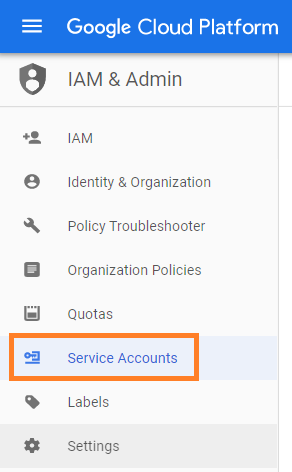

In order to create a new service account in Google Cloud Platform,

please make sure your user has a ServiceAccountAdmin role.

Now, let’s create a service account in the GCP Console:

Sign into Google Cloud Platform Console .

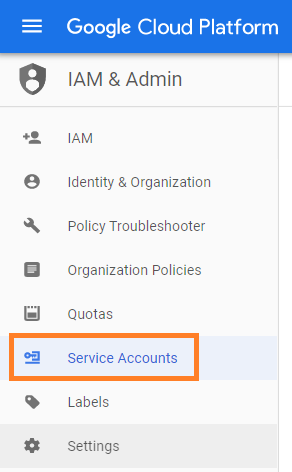

Go to IAM & Admin -> Service account

Click on CREATE SERVICE ACCOUNT .

Set your Service Account name (i.e: RiveryUser)

Set Roles

Storage Admin

BigQuery AdminCopy your Account Service ID / Email from the service account list. You will enter this later in a Rivery connection.

Mark the Checkbox - Furnish a new private key and in the key type options, choose JSON.

Click on the CREATE button. Your JSON secret key will download. Keep track of this key file as you will need to import it in a forthcoming step.

Enable Cloud Storage and BigQuery API

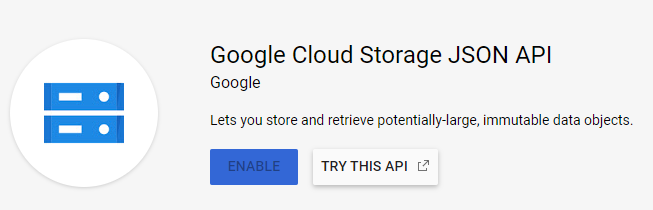

Go to API's & Services on the Google Cloud Console menu pane -> Click on Library.

Search for Google Cloud Storage JSON API and click on Enable .

Search for BigQuery API and click on Enable .

Create a Google Cloud Storage Bucket

Rivery needs a Google Cloud Storage bucket to operate as a file-zone before your data is uploaded to BigQuery.

Sign into the Google Cloud Platform Console .

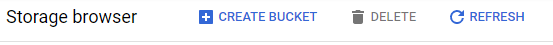

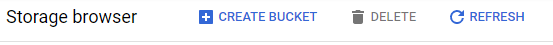

In the menu pane on the left, go to Storage -> Browser.

- Click on the CREATE BUCKET button.

- In the wizard:

- Set Bucket Name (example:project_name_rivery_file_zone)

- Set your Bucket to Region and choose your preferred location

- Click the CREATE button

Create a BigQuery Dataset

You can leverage an existing dataset or have Rivery automatically create a dataset for you bymanually inserting the dataset name in yoursource to target river.

However, you may choose to manually create your data set by following these steps:

Sign in to the Google Cloud Platform Console.

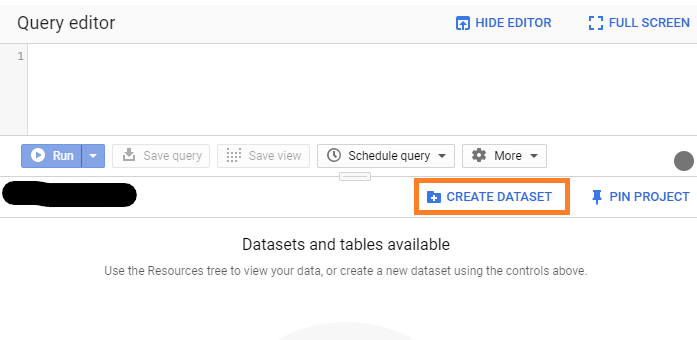

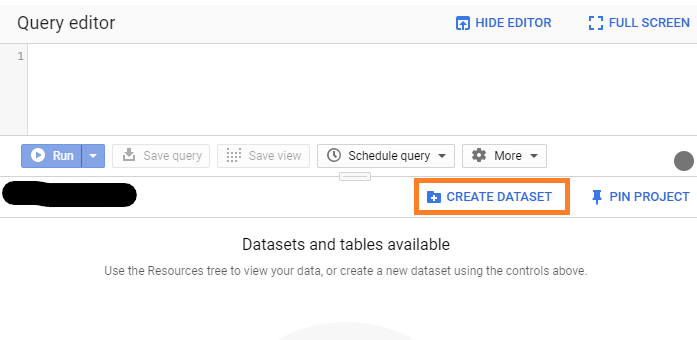

On the menu pane to the left, go to BigQuery. The Google BigQuery editor will open.

Go to the left sidebar -> Select your Project Name

To the right of the page, in the bottom half of the editor, click on the CREATE DATASET button:

In the wizard:

- Set the Dataset ID

- Set the region, please make sure the region corresponds with the Google Cloud Storage bucket region you are going to use.

More details here: https://cloud.google.com/storage/docs/locations - Click the Create dataset button.

Configure your BigQuery in Rivery

Next, we need to configure a connection to BigQuery within Rivery:

- Log into Rivery.

- Create a new connection for your BigQuery instance:

- Next, enter the credentials information for your Google Platform Service Account:

- Connection Name

- Description (optional)

- Project Id (can be found on the Google Cloud Platform Home section)

- Project Number (optional - can be found on the Google Cloud Platform Home section)

- Region - select the region your bucket is locatedYour bucket and dataset region must be compatible!

For more information:Please Note:Please Note:

- Enable the custom file zone and enter the credentials:

- Service Account email - the Service Account Id that you copied from the Service Account Wizard

- Choose file - upload the JSON file that was generated when you created a Service Account

- Default Bucket- click on the blue arrow button to pull all available buckets or insert one manually,

Click 'Test Connection' at the bottom to test! Once a valid connection is made, save the connection.

If you cannot get a valid connection set up, contact helpme@rivery.io for support.

Additional Options

Data Type Widening (Schema Shift)

When the data type of a column ischanged in the Source table, the corresponding Target column will be adjusted to accommodate the "wider" data type by default.

For instance, if the original field changes from an Integer to a String, the target data type will be modified to a String since it canencapsulate integer values. On the other hand, if the column has been changed from a String to a Date, it will retain its String data type in the target.

The toggle located in the Additional Options section guarantees that these changes will take effect as the default behavior. If you wish to disable this option and cause the River to fail in the case of a data type mismatch, you can set the toggle to false.

Please Note:

To receive notifications about these changes, it is advisable to set the "On Warning" toggle to the true in the settings tab.

Type Mapping

While extracting your data, we match BigQuery data types to Rivery data types. If a data type isn't supported, we will automatically map it as a String type.

The mapping of BigQuery data types to Rivery-compatible types is shown in the table below:

| BigQuery Type | Rivery Type |

| STRING | STRING |

| INTERGER | INTERGER |

| FLOAT | FLOAT |

| BOOLEAN | BOOLEAN |

| TIMESTAMP | TIMESTAMP |

| TIME | TIME |

| VARIANT | VARIANT |

| RECORD | RECORD |

Scenarios of Data Type Conflicts

In instances where a datatype mismatch occurs, for example, when the datatype of an existing column in the source table is modified, the datatype of the corresponding column in the target table will be determined based on certain defined rules, thereby resolving the conflict. These rules ensure that the most comprehensive data type is chosen to maintain data integrity and avoid loss of information.

Examples of Data Type Conflicts

The table below outlines various scenarios of data type conflicts and the resolution rules. The Inferred Type column represents the chosen data type in the target table when there's a conflict.

| Conflicting Types | Inferred Type | Example Scenario |

|---|---|---|

| TIMESTAMP vs. DATE | TIMESTAMP | If a column in the source, initially of TIMESTAMP type, is changed to DATE, it will be converted into TIMESTAMP in the target. |

| SMALLINT vs. BOOLEAN | SMALLINT | A conflict between SMALLINT and BOOLEAN types in the source column will lead to the target column adopting the SMALLINT type. |

| INTEGER vs. (SMALLINT / BOOLEAN) | INTEGER | If an INTEGER is updated to SMALLINT or BOOLEAN in the source, it will be promoted to INTEGER in the target. |

| BIGINT vs. (INTEGER / SMALLINT / BOOLEAN) | BIGINT | If a column of BIGINT type is changed to SMALLINT, BOOLEAN, or INTEGER in the source, it will be elevated to BIGINT in the target. |

| DECIMAL vs. (INTEGER / BIGINT / SMALLINT / BOOLEAN) | DECIMAL | A column that is INTEGER or DECIMAL in the source will be transformed into DECIMAL. |

| DOUBLE PRECISION vs. (INTEGER / BIGINT / SMALLINT / BOOLEAN) | DOUBLE PRECISION | If there is a conflict between DOUBLE PRECISION and SMALLINT in the source column, the target column will adopt DOUBLE PRECISION. |

| REAL vs. (DOUBLE PRECISION / DECIMAL / INTEGER / BIGINT / SMALLINT / BOOLEAN) | REAL | If a column of DOUBLE PRECISION type is altered to REAL type in the source, it will remain as REAL in the target. |

| FLOAT vs. (INTEGER / BIGINT / SMALLINT / DECIMAL / REAL / DOUBLE PRECISION / BOOLEAN) | FLOAT | A source column initially of FLOAT type that is updated to INTEGER will remain as FLOAT in the target. |

| VARCHAR vs. ALL DATATYPES | VARCHAR | If a column in the source was VARCHAR type and has been updated to INTEGER type, the target column will still retain the VARCHAR type. |

| OBJECT vs. ALL DATATYPES | OBJECT | If a source column is initially set as OBJECT type and later changed to DATE or INTEGER, it will still be treated as OBJECT type in the target. |

Please Note:

VARCHAR and OBJECT have higher priority and will override other data types in conflicts.

Known Issues

- Sometimes the "Storage Admin" type user role does not have a certain permission "storage.buckets.get" given to it by default:

In this case, you will have to edit your GCP user roles edit your GCP user roles by duplicating that "Storage Admin" role by clicking

{height="" width=""}, making sure the custom role you create has the storage.buckets.get permission, then assigning your service account this custom role instead of the "Storage Admin" (See the "Create a Service Account User for Rivery" section of this document).

{height="" width=""}, making sure the custom role you create has the storage.buckets.get permission, then assigning your service account this custom role instead of the "Storage Admin" (See the "Create a Service Account User for Rivery" section of this document).

Requirement for Consistent Connection Locations for Source and Target in BigQuery:

When using Bigquery as both the Source and Target, it's crucial to ensure that the connections originate from the same location (region). Failure to do so will result in a loading error displayed by Bigquery:Loading to BigQuery failed: BigQuery failed to load job. Error: Cannot read and write in different locations

.PNG)

.JPG)